by Lily Bui

ListenTree is an audio-haptic display meant to be embedded in the natural environment. A visitor to the installation notices a faint sound that seems to emerge from a tree, and might feel a slight vibration underfoot as they approach. By resting their head against the tree, they can hear sounds through bone conduction. To create this effect, a weatherproofed audio exciter-transducer is attached to the tree trunk underground, transforming the tree into a living loudspeaker that channels audio through its branches. The exhibit also uses an embedded computer such as a Raspberry Pi to connect to WiFi, which enables one tree to communicate with other trees, other sound sources, or other transducers.
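
As a rough illustration of what one tree "communicating with other trees, other sound sources, or other transducers" could mean in software, the Python sketch below (Python being the language the producers mention for web-based control) keeps a routing table from trees to audio sources and updates it from JSON commands. The `TreeRouter` class, the tree IDs, the `mic://` source naming, and the command format are all hypothetical and are not taken from ListenTree's own code.

```python
import json
from dataclasses import dataclass, field


@dataclass
class TreeRouter:
    """Hypothetical routing table: which audio source each tree plays."""

    # tree_id -> source identifier (a file path, a stream URL, or another tree's mic)
    routes: dict = field(default_factory=dict)

    def assign(self, tree_id: str, source: str) -> None:
        """Route `source` to the transducer on `tree_id`."""
        self.routes[tree_id] = source

    def source_for(self, tree_id: str):
        """Return the source routed to `tree_id`, or None if the tree is idle."""
        return self.routes.get(tree_id)

    def handle_command(self, message: str) -> str:
        """Apply a JSON command such as {"tree": "tree-1", "source": "..."}
        (a made-up message format) and return a JSON status reply."""
        try:
            cmd = json.loads(message)
            tree, source = cmd["tree"], cmd["source"]
        except (json.JSONDecodeError, KeyError, TypeError) as exc:
            # Malformed JSON, missing keys, or a non-object payload.
            return json.dumps({"ok": False, "error": type(exc).__name__})
        self.assign(tree, source)
        return json.dumps({"ok": True, "tree": tree, "source": source})


if __name__ == "__main__":
    router = TreeRouter()
    # A local recording on one tree, and a second tree fed by the first tree's
    # (hypothetical) microphone.
    router.handle_command('{"tree": "tree-1", "source": "recordings/tidmarsh.wav"}')
    router.handle_command('{"tree": "tree-2", "source": "mic://tree-1"}')
    print(router.routes)
```

A real controller would still need to hand each routed source to the sound card and amplifier; this sketch covers only the bookkeeping of which tree plays what.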

While the producers' initial intent was to create an audio installation, they have also found other use cases for ListenTree as a platform for stories, given that any source of sound, including pre-recorded or live tracks, can be played through the tree. For example, during an installation in Mexico City, ListenTree was used to play pre-recorded audio of poems by Mexican poets at a Día de los Muertos (Day of the Dead) event. Glorianna Davenport, who oversees a project called the Living Observatory, which tracks the recovery of the wetlands at Tidmarsh Farms, has also used ListenTree as a platform to play audio recorded at Tidmarsh; this installation can be found at the MIT Museum. “A very important benefit of [the interaction] is the juxtaposition of physically being here and connecting to a remote site,” remarks Davenport. ListenTree can also be expanded to include multiple trees in a single installation, an idea in which Davenport sees much potential: “It is important that the basic idea is later expanded. If you look at the installation in Mexico, it is clear that having many trees, and having the installation outdoors at events that had large audiences changes the dynamic.”

Storytellers and documentarians might conceive of other ways to sonify stories through ListenTree. The platform can play sounds collected remotely through a local, natural interface; combine a story with the interaction of its medium to aid in meaning construction; and reconcile the immateriality of digital media with the materiality of a physical exhibit.

- How ListenTree works (image from the ListenTree website)

Background/inspiration

Edwina Portocarrero, an MIT Media Lab researcher and one of the main producers of the ListenTree project, drew inspiration for the display from a collaboration with her colleague Gershon Dublon for the Media Lab’s Members’ Week, during which various Media Lab groups demo their current work. Gershon had been working with the Responsive Environments group to bring urban audiences into contact with the environmental restoration of Tidmarsh Farms in Massachusetts, which is owned and managed by Media Lab member Glorianna Davenport. The idea to build the display into a tree came from a conversation between Gershon and Edwina about how telephone poles, which are made from tree trunks, serve as conduits for communication. ListenTree returns to this idea by using the materiality of the tree to tell stories.

The project is also an experiment in sensor-based storytelling. Because audiences have found the display to be “magical, yet understated,” Edwina sees potential in sensor-based storytelling as a way to better communicate the vital signs of remote environments (e.g., their aforementioned work on creating a remote representation of Tidmarsh Farms). Edwina reflects: “Sensors are not lying. They’re telling you what the environment is about. Stories are more manipulated and twisted to emphasize certain things and not other things. We’re trying to put together a cohesive and elegant story using sensors and storytelling.” A microphone could also be attached to the display, making the participant either a receiver or a creator of content; networked ListenTree displays in different locations could then send content to and receive content from one another.

Producers

Edwina Portocarrero is a PhD candidate at the MIT Media Lab. She designs hybrid physical/digital objects and systems for play, education and performance. She previously studied lighting and set design at CalArts. An avid traveler, she has lived in Brazil, working at a documentary production house; worked as a lighting designer in her native Mexico; hitchhiked her way to Nicaragua; lived in a Garifuna village in Honduras; documented the soccer scene in Rwanda; and honed a special skill for pondering after sitting still for hundreds of hours while modeling for world-renowned artists. Currently, telepresence and telepistemology, cognitive development, and the question of what play means in the 21st century occupy her mind as she tries to imagine and reinvent the playgrounds of tomorrow. Contact: edwina@media.mit.edu

Gershon Dublon is an artist, engineer and PhD student at the MIT Media Lab, where he develops new tools for exploring and understanding sensor data. In his research, he imagines distributed sensor networks forming a collective electronic nervous system that becomes prosthetic through new interfaces to sensory perception: visual, auditory, and tactile. These interfaces can be located both on the body and in the surrounding environment. Gershon received an MS from MIT and a BS in electrical engineering from Yale University. Before coming to MIT, he worked as a researcher at the Embedded Networks and Applications Lab at Yale, contributing to research in sensor fusion. Contact: gershon@media.mit.edu

Audience

In speaking with others about their reactions to ListenTree, Edwina has found that many have “projected their own intentions for it.” For instance, some have suggested using ListenTree’s approach to reach blind communities and parks and recreation communities, among many others. The platform is a flexible one that allows a wide variety of content to be played through the display, and it invites the active participation of audiences.

Key research ideas

In a paper submitted to the ICAD (International Conference on Auditory Display) conference in New York, the producers outlined some of the key research ideas behind this project. ListenTree seeks to explore “ambient interfaces” and “calm technologies, where interaction can occur in the user’s periphery, inviting attention rather than requiring it,” much like the devices that we commonly wear or carry with us. In many setups, the technology is completely concealed underground, providing a display that is embedded in the natural environment. ListenTree’s method of delivering audio is also an experiment in audition through bone conduction, which occurs “when vibration is conducted through a listener’s skull and into the inner ear, bypassing the eardrum. One of the earliest examples of bone conduction apparatuses is attributed to Beethoven, who is said to have compensated for his hearing loss by attaching one end of a metal rod to his piano while holding the other between his teeth. Exciter transducers have been used in art (as well as increasingly in consumer products) for several decades. Despite their widespread use, by seemingly magically producing sound through bone conduction and from inside objects, transducers continue to draw audiences in.”

Other installations that use bone conduction include Laurie Anderson’s The Handphone Table, which allows participants to hear sound when they place their elbows on a table and their hands on their heads; Markus Kison’s Touched Echo, which uses bone conduction to reproduce the sounds of a Dresden air raid; and Wendy Jacob’s installation, which uses infrasonic and audible transducers to produce work for deaf students and collaborators.

Exhibits/installations

Current and past installations include RIDM in Montreal; the National Center for the Arts in Mexico City; SXSW in Austin, TX; the ICAD conference in New York; and the MIT Museum in Cambridge, MA. Installations in Spain and South Korea are slated for the near future.

In Mexico City, ListenTree was used to play pre-recorded audio of poems by Mexican poets at a Día de los Muertos (Day of the Dead) event. At the MIT Museum, ListenTree plays a soundtrack of Tidmarsh Farms, a recovering wetland. Tidmarsh is part of a larger initiative called the Living Observatory, a collection of projects that enables audiences to witness, monitor, and experience the land as it changes over a twenty-year period. The Living Observatory is run by Glorianna Davenport, who, as quoted above, sees potential in expanding ListenTree to many trees and to large outdoor audiences. The Living Observatory also follows a Creative Commons approach to all data: “We have a data sharing agreement that allows anyone to use the data. People responsible for collecting data can specify, with our help, special use cases (i.e., photography: if you put photographs in our archive, you will be credited).”

- ListenTree installation in Mexico City, MX

Technologies

Setup instructions: http://listentree.media.mit.edu/docs/ListenTree_Setup.pdf

“The ListenTree system consists of a single controller unit wired to multiple underground transducers, one per tree. The controller is designed to be a self-powered, self-contained plug-and-play module, adaptable to any tree. We use a 30-watt solar panel to charge a 12-volt, 12-amp-hour battery. In our testing, power consumption varies significantly depending on the audio source, but rarely exceeds 20 watts. Currently, duty cycling is sometimes required to conserve power, though we intend to incorporate motion sensing to solve this problem in the future. Computation and wireless network connectivity is delivered by an embedded computer running Arch Linux and Python for web-based control. We have successfully used both Raspberry Pi and BeagleBone Black model computers, and have come to prefer the latter for its stability, more efficient power regulation, and enhanced performance. Audio signals for each tree are generated on the computer and output through a USB sound card and a 20-watt stereo audio amplifier. Weather-proof connectors (Switchcraft EN-3) on the control module lead to buried speaker cables, which run underground to the transducers. We chose the Dayton Audio BCT-2, a model designed for bone conduction, as it best balanced size, efficiency, and mechanical interface. In many applications besides bone conduction, transducers are affixed to objects using adhesive rings, and are designed to produce audible sound rather than silently conduct vibration. In contrast, bone conduction transducers are usually pressed against the skull using a soft rubber interface to dampen their audible sound. We replaced the rubber interface on the BCT-2 with a hanger bolt so that the assembly could be screwed into the tree approximately 1.5 inches. The transducers were cast in silicone rubber to protect them from the elements. In our testing, 2 transducers remained functional after being underground for nearly 9 months, through the difficult conditions of Boston winter.”
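
The power figures quoted above support a quick back-of-the-envelope check. A 12-volt, 12-amp-hour battery stores about 12 × 12 = 144 watt-hours, so at the 20-watt upper bound on consumption it would last roughly 144 / 20 = 7.2 hours. The Python sketch below encodes that arithmetic; it assumes the full rated capacity is usable and ignores solar input and conversion losses (simplifications, not measurements from the producers), and the helper names, the duty-cycle parameter, and the idle-power figure in the example are hypothetical.

```python
def battery_energy_wh(voltage_v: float, capacity_ah: float, usable_fraction: float = 1.0) -> float:
    """Stored energy in watt-hours; usable_fraction models depth-of-discharge limits."""
    return voltage_v * capacity_ah * usable_fraction


def average_power_w(active_w: float, idle_w: float, duty_cycle: float) -> float:
    """Average draw when audio plays for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_w + (1.0 - duty_cycle) * idle_w


def runtime_hours(energy_wh: float, load_w: float) -> float:
    """Hours of operation from a full battery with no solar input."""
    return energy_wh / load_w


if __name__ == "__main__":
    energy = battery_energy_wh(12, 12)       # 144 Wh
    print(runtime_hours(energy, 20))         # 7.2 hours at a constant 20 W
    # Hypothetical: audio on half the time, with an assumed 3 W idle draw.
    avg = average_power_w(active_w=20, idle_w=3, duty_cycle=0.5)
    print(avg, runtime_hours(energy, avg))
```

Under the same simplifications, the 30-watt panel would need at least 144 / 30 = 4.8 hours of full-rated output to refill an empty battery, which is consistent with the producers' note that duty cycling is sometimes required.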

- ListenTree controller next to exhibit in Mexico City, MX.

Techniques

When asked how she might describe this type of documentary, Edwina responds, “We thought of it more as an auditory display in some ways, but it was also embedded in the natural environment.” It becomes an interactive medium in the sense that audiences must physically engage with the tree itself to experience the recordings played through it.

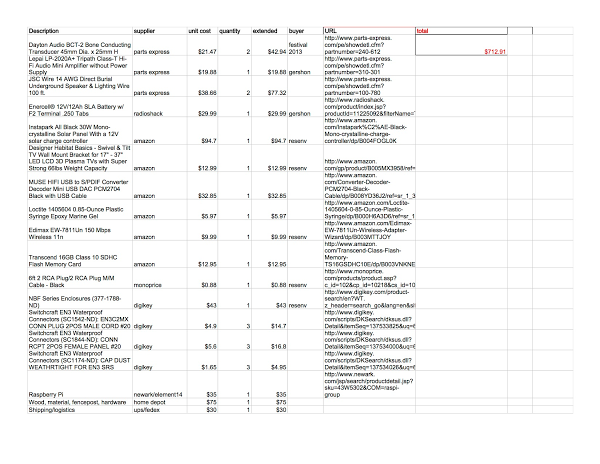

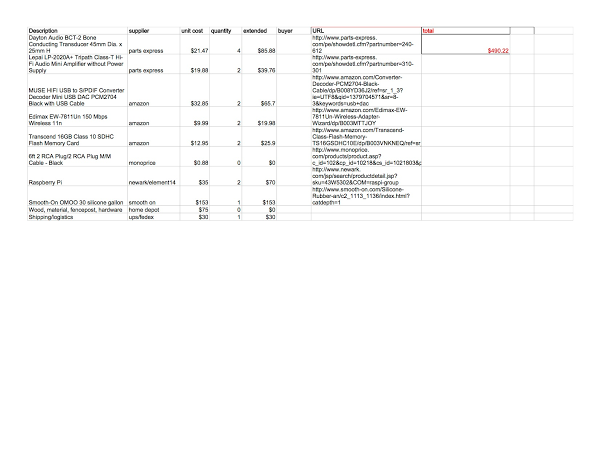

Budget range: ~$490 to ~$713

- Outdoor installation budget (~$713) / Indoor installation budget (~$490)

Future work

The producers write in their ICAD paper about what they hope to accomplish in future versions of ListenTree: “Many of our ideas rely on developing a method for user touch input; currently we are experimenting with swept-frequency capacitive sensing. We have had some success, especially indoors where the tree’s electrical ground can be isolated, but more work is required. For power savings, we intend to add motion sensors to the controller.”
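
The planned motion sensing could work as a simple timeout: audio plays while a visitor has been detected recently and stops after a quiet period, so the amplifier is not driven when nobody is near the tree. The Python sketch below is hypothetical; the `MotionGate` class, the 60-second hold time, and the idea of feeding it timestamps from a motion sensor are assumptions, since the producers describe motion sensing only as future work.

```python
class MotionGate:
    """Keep audio on for `hold_s` seconds after the most recent motion event."""

    def __init__(self, hold_s: float = 60.0) -> None:
        self.hold_s = hold_s
        self.last_motion = None  # timestamp (seconds) of the latest detection

    def motion_detected(self, now: float) -> None:
        """Record a detection reported by the (hypothetical) motion sensor."""
        self.last_motion = now

    def audio_on(self, now: float) -> bool:
        """True while a visitor was detected within the last `hold_s` seconds."""
        if self.last_motion is None:
            return False
        elapsed = now - self.last_motion
        return 0.0 <= elapsed <= self.hold_s


if __name__ == "__main__":
    gate = MotionGate(hold_s=60)
    gate.motion_detected(now=100.0)
    for t in (130.0, 160.0, 161.0):
        print(t, gate.audio_on(now=t))
```

On a real controller, `now` would come from a monotonic clock such as Python's `time.monotonic()`, so that wall-clock adjustments cannot switch the audio on or off unexpectedly.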

Funders: MIT Media Lab

URL: http://listentree.media.mit.edu

External links

WBUR feature: ListenTree
