London-based collective Marshmallow Laser Feast has emerged as one of the leading virtual reality creators in recent years. The collective applies its signature pointillist aesthetic to real-time VR experiences that explore perspectives inaccessible to the human senses. Its first VR piece, In the Eyes of the Animal, has been exhibited at festivals around the world, and its latest project, Treehugger: Wawona, won the 2017 Tribeca Storyscapes Award.

The Open Doc Lab’s project manager Beyza Boyacioglu met Ersin Han Ersin, the creative director of Marshmallow Laser Feast, for an interview during the 2017 !f Istanbul Independent Film Festival.

Beyza: Can you tell me about yourself and Marshmallow Laser Feast?

Ersin Han: We are a small experiential studio from London working at the intersection of art and technology. We often take on commercial projects in order to fund our art commissions. There are three directors, and we’ve got a small team in London as well as a big circle of freelancers all around the world.

I studied visual communication design in Turkey, then moved to London six years ago to do my master’s in computational studio arts, which wasn’t so good, so I stopped and started doing other stuff. Then virtual reality happened, three-and-a-bit years ago, and it was the perfect medium for us to start exploring, because we were already working with immersive media like room-scale projection mapping, interactive installations, light installations…

Beyza: What’s the background of other people in your team?

Ersin Han: Barney and Robin co-founded the studio, and then I jumped on board. Barney comes from a photography background; he did lots of 360 photography before virtual reality, and before forming the company as well. Robin studied film, TV and interactive media and then started working as a director of interactive DVDs and new media.

Beyza: What’s the split between your commercial and passion projects?

Ersin Han: So, the dream is 50-50. But it’s not really 50-50 all the time, because some of the commercial projects take too long. The dream, obviously, is to gradually drop most of the commercial projects, down to one or two a year, and spend the rest of the time on passion projects such as In the Eyes of the Animal or Treehugger.

Beyza: Can you talk about the funding model for your passion projects?

Ersin Han: We usually come up with the project idea, and then our in-house producers start looking for funding opportunities such as art funds, institutions, and sometimes biennials or galleries. They come on board to fund one leg of the project, and those contributions are often quite small. The part we fund ourselves usually comes from not paying ourselves.

Beyza: Let’s talk about In the Eyes of the Animal.

Ersin Han: So, In the Eyes of the Animal is a virtual reality project. Well, it’s actually a mixed reality project, because there are elements other than the virtual reality headsets. It puts you in the eyes of four different animals and insects in Grizedale Forest.

The idea came to us as an offer in 2015 from the Forestry Commission and the Abandon Normal Devices Festival (AND). AND asked us to do a project for their 2015 festival, which takes place in Grizedale Forest, home to a sculpture park.

We went on a site visit and came up with the idea of a virtual reality project focusing on the perception of the other inhabitants of the same forest, so that visitors could see the forest not just from their own point of view but also from the point of view of the animals and insects that live there. So we started working with this idea and talked to Salford University and the Natural History Museum in London, who gave us more insight into how those species perceive their world, their surroundings. Then we started building a really straightforward narrative based on the food chain.

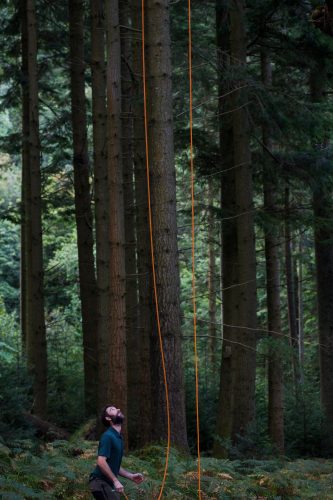

The Abandon Normal Devices Festival 2015 – Courtesy of Luca Marziale, Sandra Ciampone, Marshmallow Laser Feast

“Tree surgeon” choosing a location for the headsets that is safe for the trees. The Abandon Normal Devices Festival 2015 – Courtesy of Luca Marziale, Sandra Ciampone, Marshmallow Laser Feast

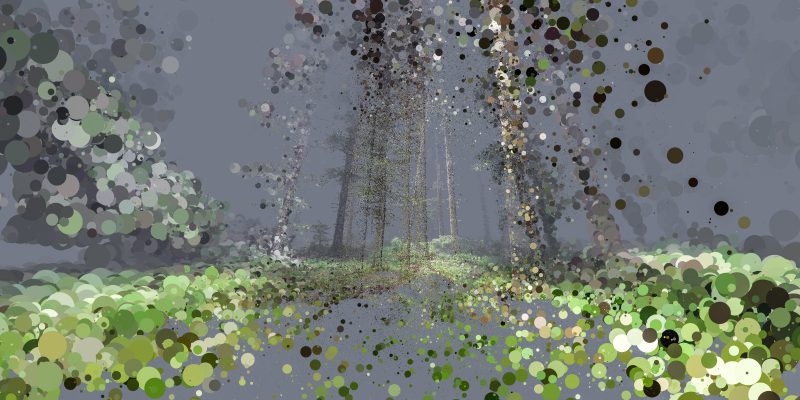

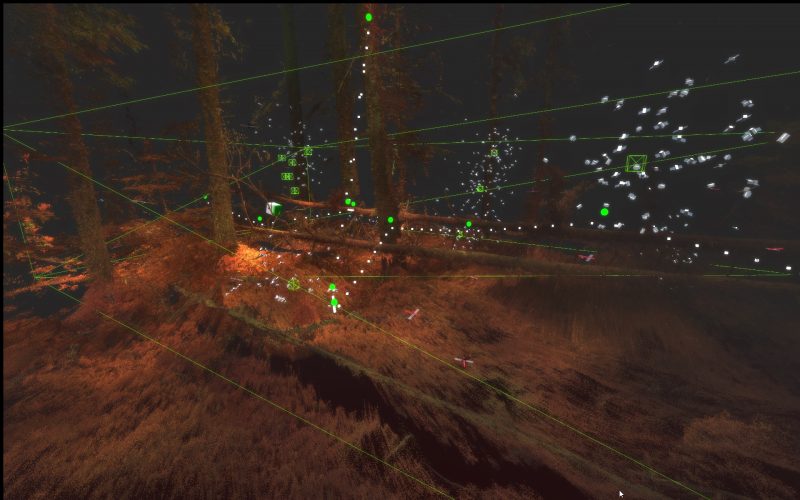

You walk through the forest as a human with your own perception, and when you arrive at that random spot in the forest, you see these strange headsets tangled in a tree. When you put a headset on, we take away your perception and put you in the eyes of a mosquito, which sees CO2. So you’re flying through this forest and you can see it photosynthesizing, with all the colors and everything: all the layers that aren’t available to us, only to mosquitoes.

Still from In the Eyes of the Animal – Courtesy of Marshmallow Laser Feast

Then you get eaten by a dragonfly, which sees 300 frames per second, so, obviously, everything is in slow motion for them. They see a wider spectrum of light than we do, and you start seeing their prey as distinctly darker or brighter than the background, which we believe is how they tell their prey apart from all the other flying things.

But again, this is a speculative project. We take those scientific facts and apply them to a visual narrative that is completely speculative.

Beyza: You have this great story about the dragonfly. Can you share it again?

Ersin Han: So, the dragonfly: it’s fascinating. Dragonflies took to the Earth’s skies around 300 million years ago, and some of their ancient relatives had wingspans of around 70 centimeters. They are the most accomplished fliers in the entire animal kingdom. They can fly in any direction, using their head as a pivot point. They can see ultraviolet as well as visible light, which means they see a wider spectrum of light than we do, and they process this information in almost 360 degrees. On top of that, their brain, small compared to their body, can project the trajectory of their prey, so they fly to that point and catch it. Their hit rate is around 96%, which is one of the highest in the entire animal kingdom.

Still from In the Eyes of the Animal – Courtesy of Marshmallow Laser Feast

But this is not just a visual experience in which you put yourself in the eyes of a dragonfly. We use the SubPac, a tactile device that vibrates against your back, which gives you the feeling of having wings. So you feel immersed, and this is probably how it would feel to have wings. Again, this is speculation.

The dragonfly gets eaten by a frog. We wanted to focus on the species living in Grizedale Forest, but we found some really interesting features in other frogs around the world, so we started combining physiological features across different species of the same family.

Still from In the Eyes of the Animal – Courtesy of Marshmallow Laser Feast

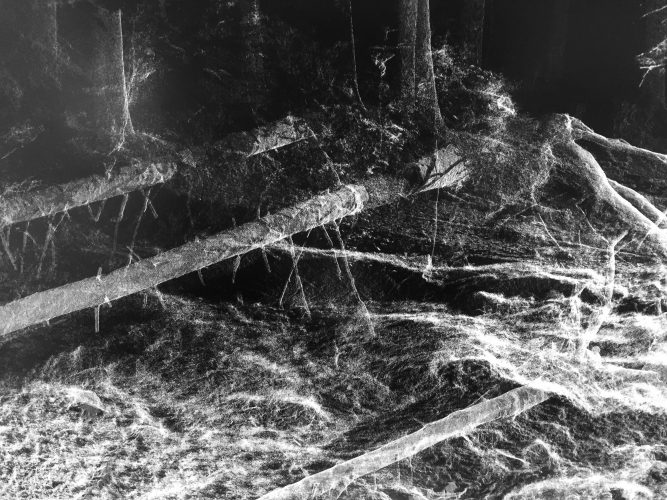

The túngara frogs, which are found from Mexico down to northern South America, can echolocate their peers through ribbiting. As they ribbit, sound waves propagate from their bodies, hit the environment around them and bounce back, and they can sense through their bodies what’s going on in the three-dimensional world. On top of that, they match this data with the ribbiting of their peers, so they can assess where their peers are, because they are almost blind. So it’s like seeing them from a distance: they feel it. But the funny thing is, they produce so much noise that they make themselves easy targets for bats.

For an owl, too, a source that creates lots of ripples on the water surface as well as producing sound is great, because owls have got those ears. They can focus on different parts of the forest to listen and then use their almost binocular eyes to see where the prey is. Then they fly almost silently, swoop in and grab the frog. But the funny thing is, their eyeballs are shaped almost like an egg, so their peripheral vision is believed to be quite abstract. Obviously, we can never know whether, somewhere along the line of evolution, their brains started correcting this physiological deformation.

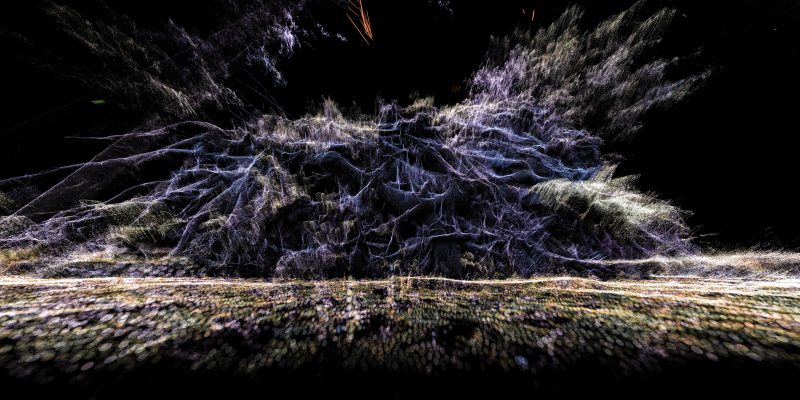

Still from In the Eyes of the Animal – Courtesy of Marshmallow Laser Feast

We took this as a fact and applied it to our point cloud data, which we manipulate in real time, to make you behave like an owl. Only the central vision is in focus, and when you turn your head, whatever you look at becomes sharper. So you explore like an owl.
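The owl effect described here, where only the center of view is sharp and everything else stays abstract until you turn your head toward it, can be sketched as a per-point blur weight driven by the angle between the viewer’s gaze and each point. This is a minimal Python illustration under stated assumptions, not Marshmallow Laser Feast’s implementation (which runs in their VVVV-based toolkit): the function name `owl_blur`, the 15-degree sharp cone and the linear 60-degree falloff are all hypothetical choices.

```python
import math


def angle_between(u, v):
    """Angle in radians between two 3D vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    c = max(-1.0, min(1.0, dot / (nu * nv)))
    return math.acos(c)


def owl_blur(point, eye, gaze, fovea_deg=15.0, falloff_deg=60.0, max_blur=1.0):
    """Blur weight in [0, max_blur] for one point of the cloud.

    Hypothetical parameters: points within fovea_deg of the gaze direction are
    sharp (0.0); blur then rises linearly and saturates at max_blur once the
    point is fovea_deg + falloff_deg or more away from the gaze.
    """
    to_point = tuple(p - e for p, e in zip(point, eye))
    theta = math.degrees(angle_between(to_point, gaze))
    if theta <= fovea_deg:
        return 0.0
    t = min(1.0, (theta - fovea_deg) / falloff_deg)
    return t * max_blur
```

A renderer could evaluate this per point every frame and map the weight to point size or transparency. Because the gaze vector follows head rotation, whatever you turn toward drops to zero blur.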

The idea, obviously, is that the owl gets shot by a hunter, which is you, when you take off the headset. That’s your vision.

Beyza: Can you talk about the technology?

Ersin Han: This is another example of how we use commercial projects to make passion projects possible. In early 2015 we did a car commercial about visualizing how cars sense their environment. We decided to scan the environment and projection-map that data around the car, which gave it this pointillist aesthetic. That project gave us enough time and resources to build our own pipeline and toolkit, which also made our passion project much easier. Without it, it probably would have been far more difficult.

Lidar is a technology that has been around for about sixty years and has become a standard tool for architects to quickly create a 3D representation of a space. We used it to capture a highly accurate representation of that forest.

Beyza: So, it’s not an image but it’s data.

Ersin Han: Exactly. We often call it digital fossils, because if that forest is gone, we still keep an accurate record of it. This is something we’re actually proud of, because we are almost building a digital library, a catalogue of these spaces, on our hard drives, which we plan to open source in the near future.

Beyza: Can you walk us through the process of putting the data together to make it resemble the forest?

Ersin Han: The process obviously starts in the forest. You have to scan from multiple positions because of occlusion: if you want a complete representation from every angle, you need to scan objects from almost all the way around. Scanning a large space takes almost a day, sometimes two.

Lidar scanner – Courtesy of Luca Marziale, Sandra Ciampone, Marshmallow Laser Feast

Then you take that data into the software of your choice; we often use Pointools or FARO’s SCENE. You load all the data, it processes the information, and in a few hours you’ve got completely stitched 3D data. The scanner also takes photos in 360, and those photos are then projected onto the point cloud, which gives you an accurate representation including the color of the space.
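Scanning from several positions only works if every scan ends up in one shared coordinate frame. As a rough sketch of that step, assuming each scanner position’s pose is already known (in practice, registration software estimates it) and that the scanner is levelled so the pose reduces to a rotation about the vertical axis plus a translation, merging means transforming each scan’s points and concatenating them. The function names `transform_scan` and `merge_scans` are hypothetical.

```python
import math


def transform_scan(points, yaw_deg, translation):
    """Map (x, y, z) points from one scanner's local frame into the world frame.

    Assumes a levelled scanner: rotate about the vertical z axis by yaw_deg,
    then shift by the scanner's world position.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]


def merge_scans(scans):
    """scans: list of (points, yaw_deg, translation), one entry per scan position."""
    merged = []
    for points, yaw_deg, translation in scans:
        merged.extend(transform_scan(points, yaw_deg, translation))
    return merged
```

For example, two scanners 10 m apart and facing each other both report a trunk 5 m straight ahead in their own frames; after the transform, both readings land at world position (5, 0, 0), up to floating-point error.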

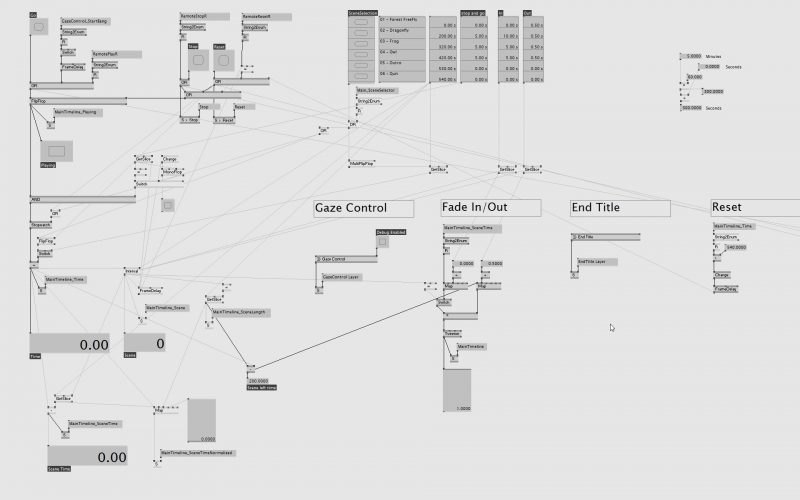

Software screengrab – Courtesy of Natan Sinigaglia

Beyza: So the Lidar collects data but it also takes photographs.

Ersin Han: Exactly, but not with the Lidar itself. The scanner has a laser rangefinder that fires all around and measures distances. Then it does the same sweep with a built-in camera to take photos in 360. Since the center point is exactly the same, the photo data can be projected on top of the three-dimensional data, giving you the color as well as the three-dimensional representation.
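Because the laser and the camera share a center point, coloring the cloud reduces to asking, for each point, which panorama pixel looks in the same direction. The sketch below assumes the 360 photo is stored as an equirectangular image, that the scanner sits at the origin with z pointing up, and that the image’s left edge corresponds to azimuth -180 degrees; real scanners and tools have their own conventions, and `equirect_pixel` and `colorize` are hypothetical names.

```python
import math


def equirect_pixel(x, y, z, width, height):
    """Return (col, row) of the equirectangular pixel that looks toward (x, y, z).

    Assumed convention: camera at the origin, z up, columns span azimuth
    -180..+180 degrees left to right, rows span elevation +90..-90 top to bottom.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("point coincides with the scanner center")
    azimuth = math.atan2(y, x)                         # -pi .. pi
    elevation = math.asin(max(-1.0, min(1.0, z / r)))  # -pi/2 .. pi/2
    u = (azimuth + math.pi) / (2 * math.pi)            # 0 .. 1, left to right
    v = (math.pi / 2 - elevation) / math.pi            # 0 .. 1, top to bottom
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


def colorize(points, image, width, height):
    """image: list of rows, each a list of (r, g, b). Returns (x, y, z, r, g, b) tuples."""
    colored = []
    for x, y, z in points:
        col, row = equirect_pixel(x, y, z, width, height)
        r, g, b = image[row][col]
        colored.append((x, y, z, r, g, b))
    return colored
```

This nearest-pixel lookup is the simplest choice; a production pipeline would more likely interpolate between neighboring pixels and correct for any small offset between the camera and the laser center.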

Beyza: Okay, so that’s color. How about the texture?

Ersin Han: Yes, that comes from the photographs. Well, this is an interesting one, because around ten years ago Lidar scanners couldn’t take any photos; there were no built-in cameras. People would first scan a space, then take the Lidar scanner off the tripod and mount a spherical photography kit. So you’d do another round of 360 photographs and then stitch them together in software.

Beyza: If you only have the data, it’s a point cloud, like the ghost of a space.

Ersin Han: Exactly, exactly. It’s a really accurate representation of the space without any color. That is still useful; architects and engineers still use it for measurements.

Software screengrab – Courtesy of Natan Sinigaglia

For us, the color is really important. Even if you want to paint it, you need to know the source and then paint over it. As a data type, it’s literally the XYZ position of a point and then the RGB color of that very point. So you bring those millions of points into our own software, which is based on VVVV. It is a node-based, multi-purpose tool that you can do many things with. I mean, we use the same toolkits, the same software, for light shows, robot control systems, virtual reality, and stitching or manipulating that kind of data.
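Since each point is just a position plus a color, a point cloud can be stored as a flat array of fixed-size records. The layout below, three little-endian 32-bit floats followed by three 8-bit color channels (15 bytes per point), is an illustrative assumption, not the format VVVV, Pointools or FARO SCENE actually use; `ColoredPoint`, `pack_points` and `unpack_points` are hypothetical names.

```python
import struct
from typing import NamedTuple


class ColoredPoint(NamedTuple):
    x: float  # position
    y: float
    z: float
    r: int    # color, 0..255 per channel
    g: int
    b: int


# Hypothetical record layout: "<" = little-endian, no padding;
# fff = three float32 coordinates, BBB = three unsigned bytes of color.
RECORD = struct.Struct("<fffBBB")


def pack_points(points):
    """Serialize points into one contiguous bytes object."""
    return b"".join(RECORD.pack(*p) for p in points)


def unpack_points(data):
    """Parse bytes produced by pack_points back into ColoredPoint records."""
    return [ColoredPoint(*fields) for fields in RECORD.iter_unpack(data)]
```

At 15 bytes per point, ten million points come to 150 MB uncompressed. Storing coordinates as float32 halves the size compared with double precision, at the cost of some precision far from the origin.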

Beyza: Is it a game engine?

Ersin Han: If you want to build a game engine, VVVV is also a tool you can build one on. It’s like a programming language based on C#, but it gives you all kinds of ready-to-use tools, as well as an open editor where you can write your own shaders or plug-ins as you like.

Screengrab from VVVV – Courtesy of Marshmallow Laser Feast

Beyza: In In the Eyes of the Animal, can we say you’re making a commentary on our human-centric vision of the world?

Ersin Han: That’s quite accurate, actually. We call it the “world beyond our senses.” The first thing we ask ourselves before we make any virtual reality or mixed reality project is: can you have this experience in any other medium? If you can, then there is no point in doing it in VR. Everything starts from there.

In this particular project, the main starting point was that we didn’t want people to see the forest through the eyes of a human being, but to put them in another species’ point of view. That shifts the center of the universe from us to other species we are not familiar with, something we don’t do in our day-to-day lives. So, yes. Ideally, we borrow the visitors’ heads, their perception, and put it into something else’s perception. And that hopefully seeds some idea of how you can actually empathize, how you can be compassionate, or how you can be imaginative about things that you don’t know.

Beyza: Have you seen Daniel Steegmann’s Phantom?

Ersin Han: Right, so I haven’t seen it in the headset, but I’ve seen documentation of it. Is it the black-and-white piece that you walk around in?

Beyza: Yeah, that’s a very cool project. He is interested in this philosophy where the human becomes one with the forest. It’s about the idea of mimicry in animals but not from a Darwinist, evolutionary point of view; it’s not about survival, but it’s more about giving in to the allure of the space. You become one with the space because you’re seduced by your environment. When you look at yourself in VR, you don’t see yourself and I think that’s the best excuse I have seen for not seeing yourself in the project.

Ersin Han: Yeah, it’s great. You become, as I think Felix said, a floating consciousness. This idea of taking your mind out of your body is quite fascinating: you stop thinking with your human knowledge, your human senses, your human preconceptions. You leave everything behind, and it becomes much easier to convince someone to feel something new.

Beyza: Let’s talk about Treehugger.

Ersin Han: Treehugger is the continuation of In the Eyes of the Animal, although In the Eyes of the Animal isn’t finished, because we want to do In the Eyes of the Bats, maybe In the Eyes of the Frog or In the Eyes of the Dolphin.

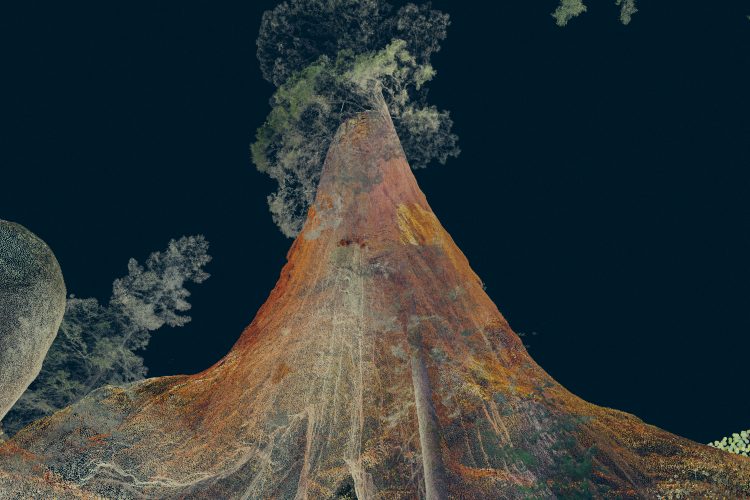

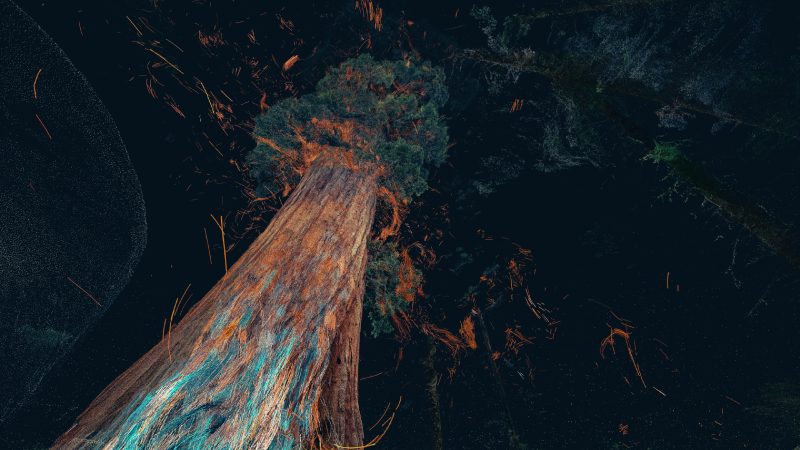

Still from Treehugger: Wawona – Courtesy of Marshmallow Laser Feast

Treehugger is about seeing the inner systems of a tree just by looking at it. You put your head inside a tree and see the journey of a single drop of water as it is drawn in by the roots and pumped up to the canopy, and then you witness transpiration. You follow that journey and can interact with every step of it. We started with the largest living individual things on the planet, the sequoias. Sequoia trees grow up to 120 meters. Their humongous scale, again, shifts the center of the universe from yourself to somewhere else; we reconsider our position and our role on this planet. This is the backbone of the whole journey, but Treehugger is a multi-chaptered story: you can see the journey of water in one giant tree, or feel time in another.

Stills from Treehugger: Wawona – Courtesy of Marshmallow Laser Feast

We also scanned bristlecone pines in the White Mountains first. They are the oldest living individual trees, around 5,650 years old; sequoia trees are 3,500 years old. We are focusing on the time aspect and our stupid perception of time. It’s stupid because we don’t understand what it means for a thing to be alive for 5,000 years. We are, again, speculating about this invisible world that is not available to our senses, neither to our brains nor to our eyes, and playing with the interplay between my body today and the body of another species that is 5,000 years old.

Scanning Sequoia trees – Courtesy of Marshmallow Laser Feast

So we’ve got a physical structure that is a representation of a sequoia tree, but sequoias sometimes grow to 15 to 20 meters in diameter, so there’s no way of really representing their sheer scale in an indoor gallery space. Instead we built a physical sculpture that you can reach out and touch, with a tactile surface similar to the bark of a sequoia. There are holes in the sculpture that you can put your head into to see the inner systems of the tree from the inside, a view nobody could normally have. We followed the same methodology: scanning those places, collecting the data, manipulating it in real time, and adding speculative elements based, again, on scientific facts, such as how sequoias draw up all that water.

Bark of the sequoia tree model – Courtesy of Marshmallow Laser Feast

Treehugger: Wawona installation – Courtesy of Marshmallow Laser Feast

Treehugger will be a multi-chaptered story of endangered trees around the world. The main question is: can we reconnect to nature with virtual reality? Or can we use mixed reality to create intimate experiences that seed the idea of the trees’ lives and their importance? Without them, we can’t breathe.

But again, we work on multiple projects at a time. For one, we are looking into Sweet Dreams, which is a fine-dining experience.

Beyza: Is it VR?

Ersin Han: It’s a virtual reality fine-dining experience.

Beyza: You eat while you’re wearing the headset?

Ersin Han: Yes, but you see completely different things. What is that relationship between what you are seeing and what you are eating, and how can you manipulate that?

It started with the question: do we taste in our dreams? If so, can we flavor them? So it’s an interplay, and a new area of exploration for us that’s going on on the side. Hopefully next year it will launch at The Fat Duck, the multi-Michelin-starred restaurant in the UK, with chef Heston Blumenthal.

Beyza: Who else is in your team?

Ersin Han: So, at the moment, we’ve got Bath University looking into machine-learning-driven computer vision techniques to track things that are deformable and ever-changing, such as food, because we need new technology to get that accurate, detailed representation.

Heston has a whole creative team experimenting with fusion cuisine, looking into the relationship between shape, taste and scent. He is also known for a cooking method he has practiced for more than a decade called encapsulation, where he presents a small amount of flavor in a way that makes it taste much larger than it is. So we will explore our sensory relationship with food and drink, combining neurology and synesthesia, to tantalize the mouth and the mind.

Beyza: That sounds really interesting. Lastly, I have a speculation question: What should we expect from the future of VR?

Ersin Han: Ten years from now, my personal wish is that virtual reality can open up a better space for us to understand everything around us that wasn’t accessible before, so that we can empathize and build a better future. But it’s really difficult to trust our naive perception of the future that says everything will be great. From my point of view, we have to start talking about what kind of future we want from virtual reality, and from technology in general. We weren’t expecting anything from Facebook; it was a fun platform, but we didn’t know that we were exchanging our privacy in order to connect with our elementary school friends. Today we know this; we’ve seen lots of crises around the world over surveillance. We didn’t ask those questions when Facebook was coming, but now we have an opportunity. A new technology is emerging. It is more intimate than any technology that has ever existed, and in virtual reality it is so easy to collect personal data from someone: where they’re looking, what their interests are. So I’d rather focus on those aspects than on what the future will be.

But, from a completely techno-optimistic point of view, I hope in fifty years’ time we will have eyeball replacements, or if not, contact lenses that can project the light fields of different places onto our eyes, so that we don’t need to travel to another country. On the flip side, I hope it will give us better insight into nature and the things we are destroying today.

In ten years’ time, probably every other person will have some form of virtual or mixed reality headset, either in their pocket or on their eyeballs.

In fifty years’ time, with artificial-intelligence-embedded microprocessors, that technology will probably become invisible. But again, it’s really difficult to see through the fog of virtual reality hype to assess it.

Beyza: And in the coming years, what tools should we be looking out for?

Ersin Han: Everyone is looking to Magic Leap to change the world, and I think we all want that, because it could improve our day-to-day lives. It’s not just an experience or a medium that you spend a certain amount of time with.

What they are aiming to do is… they are super secretive, so they don’t have a proper statement. But based on the articles, they are building a headset the size of a pair of sunglasses that can project every color, as well as black, onto your retina. It would allow you either to superimpose computer-generated imagery onto your world or to detach you from it completely and put you somewhere else. So the same headset could be used either way.

From an augmented reality point of view, as soon as you can overlay data on top of your day-to-day surroundings, that might be quite beneficial. You don’t need to look at your phone for directions; they’re in front of your eyeballs. But the interesting part, the one they think will be revolutionary, is this: if that technology works and it’s really that size, why do we need any other screen in our lives? No TVs, no phones, no laptops. Everything will be right in front of your eyes, mapped to the world as you like.

Beyza: How about the collective experience of seeing things together?

Ersin Han: Social VR is everything. And I don’t know how successfully they can build it. An average American travels 700 miles to go to a festival and spends hundreds of dollars, which they can’t do more than once or twice a year. But if you can have the feeling of being there just by watching it and feeling it through tactile elements or other devices, then you can actually access it, communicate, and have a collective experience as if you were at a concert, from the comfort of your home or your office. So, it’s quite interesting.

Beyza: To play the devil’s advocate, I’ll say sometimes the journey is the experience.

Ersin Han: Yeah, exactly. The interesting thing is, when you look at the Agricultural Revolution, the quality of our lives improved in certain ways, but it also got much worse in terms of connecting with nature, understanding our surroundings, or knowing basic survival skills. They all disappeared. But it gave us enough time to study ourselves, to build humankind’s humongous knowledge of its surroundings. It gave us time not to worry about what we were going to eat in the evening but to talk about the stars. So, in the same way, maybe if you take the journey, which is where you spend quite a bit of time, out of recreation, that becomes new time you can spend on something else that is beneficial for yourself and for humankind overall. You never know.

Beyza: Thank you, Ersin.

Ersin Han: Thank you very much.
